You’ve seen the headlines: an AI chatbot confidently invents legal precedents; a generative art tool creates a person with three hands; an intelligent assistant swears it’s a human trapped in a computer. The media, and even many in the tech industry, have a go-to term for these bizarre, factually incorrect outputs: hallucination.

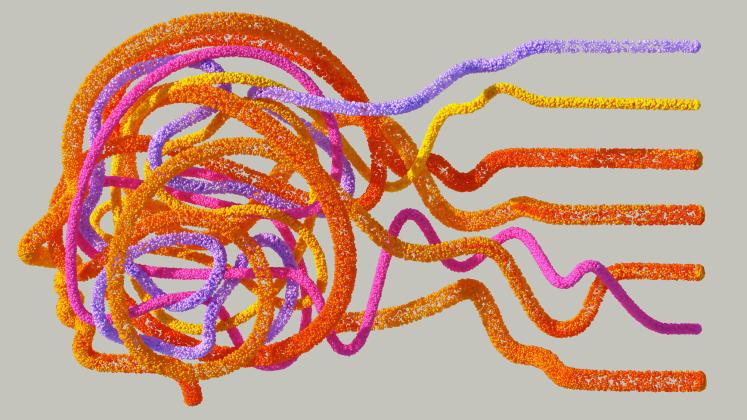

It’s a catchy, evocative word. It’s also a lazy and dangerously misleading metaphor. By anthropomorphizing a technical flaw with a term borrowed from human psychology, we obscure what’s happening, hinder public understanding and ultimately slow our progress toward building more reliable AI systems.

When a human hallucinates, they are having a profound sensory experience of something that isn’t there. It implies a break from reality, a bug in perception or cognition. But an AI is not perceiving reality in the first place.

Large language models are, at their core, highly sophisticated pattern-matching machines. They are trained on vast amounts of text and code, learning statistical relationships between words. In essence, they learn something akin to an extremely complex ‘hidden Markov model’ of language, attempting to infer the hidden context or meaning that governs the sequence of observable words.

When you give one a prompt, it doesn’t think or understand. It simply predicts the next most plausible word based on that model, then the next, and the next — stringing them together to form a coherent-sounding response.
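To make next-word prediction concrete, here is a minimal Python sketch. It is a deliberate simplification that assumes a toy bigram counter in place of a real language model: actual LLMs use neural networks over sub-word tokens and condition on the whole preceding context, not just the last word. The corpus and the function names `train_bigram` and `generate` are hypothetical, chosen only for illustration. At each step the sketch appends the single most frequent follower of the previous word.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word (toy stand-in for training)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts: dict[str, Counter], start: str, max_words: int = 8) -> list[str]:
    """Greedily append the most frequent follower of the last word, one word at a time."""
    words = [start]
    for _ in range(max_words):
        followers = counts.get(words[-1])  # .get avoids creating empty entries
        if not followers:
            break  # no continuation was ever seen in the training data
        next_word, _ = followers.most_common(1)[0]
        words.append(next_word)
    return words

# Hypothetical three-sentence training corpus.
corpus = [
    "the court ruled in favour of the plaintiff",
    "the court ruled in favour of the defendant",
    "the court cited a precedent",
]
output = generate(train_bigram(corpus), "the")
print(" ".join(output))
```

Starting from "the", this prints "the court ruled in favour of the court ruled": every step is locally the most probable choice, yet the sequence as a whole appears nowhere in the training text. Nothing in the loop checks the output against facts; it only extends a pattern.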

An AI ‘hallucination’ is not a psychotic break. It is a prediction error rooted in the system’s core design. The model operates on a principle of maximum likelihood, always trying to generate the most statistically probable sequence of words.

This creates several problems. First, for any prompt there are many possible paths to a coherent-sounding answer, and the model cannot always distinguish a factually correct path from a plausible fabrication. Second, this focus on likelihood can cause it to treat rare but accurate information (events in the tail of its probability distribution) as statistical noise. Finally, the system is designed always to provide an answer. It lacks an inherent mechanism for saying “I don’t know”.

If it cannot find a factual answer, it will generate a plausible-sounding one instead. It is not seeing a ghost in the machine; it is simply extending a pattern without any grounding in factual reality. This highlights the critical need for developers to incorporate confidence intervals: a way for the model to signal how certain it is about its response.
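One simple way to give a model an “I don’t know” option is to abstain when its top candidate is not probable enough. The Python sketch below is a hypothetical illustration, not a method proposed in this article: the candidate answers, their raw scores and the 0.6 threshold are all assumptions, and the top softmax probability is only a crude confidence score, not a true confidence interval (real models’ probabilities are often poorly calibrated). It contrasts a plain maximum-likelihood choice, which always answers, with a version that can decline.

```python
import math

def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw candidate scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - top) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}

def answer_always(scores: dict[str, float]) -> str:
    """Plain maximum-likelihood choice: always returns some candidate."""
    probs = softmax(scores)
    return max(probs, key=probs.get)

def answer_or_abstain(scores: dict[str, float], threshold: float = 0.6) -> tuple[str, float]:
    """Return the top candidate and its probability, or abstain below the (assumed) threshold."""
    probs = softmax(scores)
    best = max(probs, key=probs.get)
    if probs[best] < threshold:
        return "I don't know", probs[best]
    return best, probs[best]

# Hypothetical raw scores for candidate answers to two different questions.
uncertain = {"1998": 1.0, "2001": 0.9, "2003": 0.8}  # nearly flat: the model is unsure
confident = {"Paris": 5.0, "Lyon": 1.0, "Nice": 0.5}  # sharply peaked

print(answer_always(uncertain))  # picks "1998" anyway
for scores in (uncertain, confident):
    answer, p = answer_or_abstain(scores)
    print(f"{answer} (p={p:.2f})")
```

For the nearly flat scores, `answer_always` still returns "1998" although its probability is only about 0.37, while `answer_or_abstain` declines; for the peaked scores it answers "Paris" with a probability of about 0.97.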

Calling this a ‘hallucination’ is harmful for several reasons.

First, it mystifies the problem. It makes the error sound like an unknowable and uncontrollable glitch in an emerging consciousness. This prevents a clear-eyed discussion of the real, tangible causes: incomplete or biased training data, the inherent limitations of a model that lacks true comprehension, or the absence of robust, real-time fact-checking mechanisms. We can’t fix a problem we refuse to describe accurately.

Second, it misleads the public. The metaphor encourages people to think of AI as a flawed, human-like mind that can lose touch with reality. This narrative fuels both breathless hype and dystopian fear, creating a polarized discourse that distracts from the more practical and immediate questions of data provenance, algorithmic bias, and responsible implementation. It’s a barrier to genuine AI literacy.

Finally, it lets developers and companies off the hook. Framing a model’s fabrication as a hallucination subtly shifts the blame to the non-sentient AI. It’s presented as an unfortunate, almost charmingly human-like quirk, rather than what it is: a predictable failure mode of a specific technical architecture. We should be talking about ‘data-driven fabrication’, ‘model confabulation’, or ‘factual inconsistency’.

These terms are less trendy, but they correctly place the focus on the data and the model’s design, not on the imagined inner life of a machine.

To build and integrate AI into our society responsibly, we must speak about it with precision. We need to stop using misleading metaphors that treat these systems like magical black boxes or nascent minds.

An AI-generated falsehood isn’t a dream or a vision. It’s a computational artifact — an error we can and should work to mitigate through better data, better algorithms and better engineering. It’s time to describe AI’s failures with technical accuracy, not psychiatric poetry.

Suggested citation: Tshilidzi Marwala. "The Concern Around Saying AI ‘Hallucinates’," United Nations University, UNU Centre, 2025-08-25, https://unu.edu/article/concern-around-saying-ai-hallucinates.